Academic Projects

- Nvidia – BridgeToTurkey Fund, (2023-2024), "Teaching SLAM with Autonomous Robots for Rescue Tasks", Principal Investigator.

- European Commission Horizon 2020 Marie Skłodowska-Curie Actions Cofund program, (CoCirculation2, TUBITAK 2236), (2021-2023), A Generalized Adaptive Multi-Task Perception System for Mobile Platforms, Principal Investigator.

- BAP (Scientific Research Projects), "FKB-2022-20194", Anomaly Detection in Autonomous Mobile Vehicles 2022-2024, Principal Investigator

- BAP (Scientific Research Projects), "FAY-2022-20118", Development of Artificial Intelligence and Robotics Based Autonomous System Applications with Deep Learning 2022-2023, Researcher

- Toyota/Inria Project, (2017-2021), Senior Researcher.

- EU Project (European Union), 3rd Hand Robot, (2013-2017), Senior Researcher.

- TÜBİTAK (Scientific and Technological Research Council of Turkey), "111E285", Hybrid Mapping and Scene Perception by Comparison Methodology for Multi-Robots, (2012-2013), PhD Student.

- BAP (Scientific Research Projects), "5720", Different Perception Types and Sharing via Communication for Multi-Robot Systems 2011-2012, PhD Student.

- BAP (Scientific Research Projects), "09HA210D", Scene Recognition with Multi-Robots, 2009-2010, PhD Student.

- TÜBİTAK "107M240", Scene Recognition, Navigation and Coordination on Mobile Robots based on Attention, (2007-2010), PhD Student.

For Students

- Active Object Tracking on a [Duckie]Drone

In this project, you will develop an active tracking algorithm on a real drone. This will be the continuation of previous projects from 2023 and 2024. The drone is expected to track the object using sensors such as RGB cameras and then follow the trajectory of the tracked vehicle. The vehicle can be a DuckieBot. You will use the Duckiedrone available in the lab.

In the project, you are expected to learn and use ROS for controlling the drone and collecting images, and to learn and use modern machine learning methods (such as deep learning) for tracking.

Good to know: Python.

You will learn about: Drones, simulators, ROS, object detection and tracking.

Level: BSc, MSc.
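To give a feel for the "track, then follow" idea in the drone project, here is a minimal sketch of a proportional follow controller. It is only an illustration under stated assumptions, not the method used in the previous projects: the `BoundingBox` type, the `follow_command` function, the gain values, the target area ratio and the sign convention are all hypothetical, and the detector that produces the bounding box is assumed to exist elsewhere.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical detector output)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def follow_command(box, image_width, image_height,
                   target_area_ratio=0.10, k_yaw=1.0, k_forward=2.0):
    """Map one detection to (yaw_rate, forward_speed) with proportional gains.

    The gains and the target area ratio are illustrative, untuned values.
    """
    # Horizontal offset of the box centre from the image centre, in [-1, 1].
    center_x = 0.5 * (box.x_min + box.x_max)
    offset = (center_x - image_width / 2.0) / (image_width / 2.0)

    # Fraction of the image covered by the box, a rough proxy for distance.
    area = (box.x_max - box.x_min) * (box.y_max - box.y_min)
    area_ratio = area / (image_width * image_height)

    # Assumed convention: a target on the right gives a negative yaw rate.
    yaw_rate = -k_yaw * offset
    # Move forward when the target looks small, backward when it looks large.
    forward_speed = k_forward * (target_area_ratio - area_ratio)
    return yaw_rate, forward_speed
```

In a ROS setup, the two returned values would typically be copied into a velocity message and published at a fixed rate; that wiring is left out here so the sketch stays free of ROS dependencies.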

Previous projects are as follows:

- 3D Lidar-based SLAM Implementation on a Real Mobile Robot

In this project, you will implement a SLAM algorithm such as https://github.com/zijiechenrobotics/ig_lio on a real mobile robot. The robot has a 3D Lidar and a depth camera, and it moves with a differential drive locomotion system. You will learn and use ROS (www.ros.org). You will also collect data from the campus at different times of the semester. Outcomes of the previous semester projects: codes 2023 | website 2023, codes 2024 | website 2024.

Good to know: Python.

You will learn about: Mobile Robots, ROS, various algorithms for mobile robots.

Level: BSc, MSc.

- Remote Control and Interface Design for Human-Robot Interaction of a Mobile Robot

In this project, you will develop an interface for a mobile robot. The interface will offer various command options, and the project may also include hardware improvements. For previous versions, see: RoboVoyager | Git Repo

Good to know: Python.

You will learn about: Mobile Robots, ROS, various algorithms for mobile robots.

Level: BSc, MSc.

...

- Learn to Race on DuckieBots

You will develop algorithms to complete a race track. As an example, see the Learn-to-Race framework. You will learn and use reinforcement learning algorithms together with perception algorithms to control the vehicle, and you will use DuckieBots for this task. These tracks are generally part of other challenges; if the calendars fit, you will have the chance to submit to one of these challenges. All code will be written in the simulator. Previous semester studies on the topic: codes | website

Good to know: Python.

You will learn about: Autonomous vehicles, reinforcement learning.

Level: BSc, MSc or PhD.

- Simulation in CARLA. CARLA is an open-source simulator for autonomous driving research with a large community of researchers. Several datasets and benchmarks have been built with CARLA. It can simulate various vehicles, pedestrians and cyclists. Different environments such as highways, urban streets and countryside buildings are available. Weather conditions such as sun, rain and snow can be simulated with photorealistic rendering. In this project, the students are expected to create a scenario that will be used as a benchmark for self-driving research. For details, please get in touch.

Level: BSc, MSc or PhD.

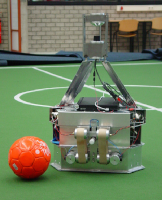

- Goal Kicking Platform (simulator or real-world). In this project, the students will detect the skeleton pose of a human goalkeeper and kick the ball so that it scores a goal. The kicking action can be performed in simulation. Detection of the goalkeeper and the field will be performed on real images. Further data can be provided by real football clubs.

Level: BSc or MSc.

...

...

...

...